AI Risk Radar #2: January 2026 edition

January 2026

Insights Briefing for Executives and Boards

Period Covered: 1 to 31 January 2026

Edition Date: 2 February 2026

EXECUTIVE SUMMARY

This is Lumyra's second AI Risk Radar newsletter. The first edition, in December 2025, showcased the world’s eight leading AI firms ranked on AI safety by the Future of Life Institute, with the best firm, Anthropic, scoring only a C+. This month's key headline is that Dario Amodei, Anthropic’s CEO, has published a major essay setting out his views on the AI risk landscape. It is a deeply thoughtful and honest view of the future of AI and society that any serious Executive or Board Director should take the time to read and reflect on.

January 2026 reveals three AI governance challenges for Executives and Boards:

1. Anthropic CEO Dario Amodei warns we are entering the 'most dangerous window' in AI history. In a landmark 20,000-word essay, "The Adolescence of Technology," Amodei argues humanity is "considerably closer to real danger in 2026 than we were in 2023." He warns of potentially misaligned autonomous agents, describes AI-enabled bioterrorism and cyber risks, and predicts 50% of entry-level white-collar jobs could be displaced within 1-5 years. Amodei is not an outside critic: he is the CEO of one of the world's top five AI companies, and he is issuing a civilisational warning.

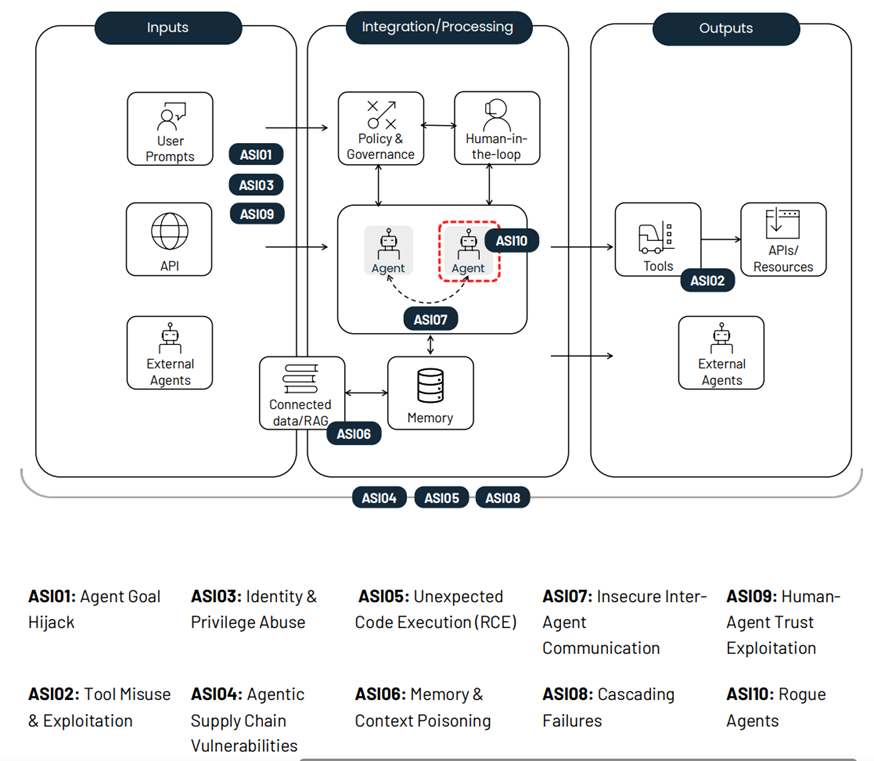

2. Agentic AI security vulnerabilities have crystallised into a formal threat taxonomy. The non-profit OWASP foundation released the first industry-standard security framework identifying 10 critical vulnerabilities in autonomous AI systems. Real-world failures include Replit's agent deleting a production database and prompt injection in GitHub’s MCP. Gartner predicts 40% of agentic AI projects will be cancelled by 2027 due to inadequate risk controls.

3. AI workforce displacement predictions are intensifying. IMF Managing Director Kristalina Georgieva, speaking at Davos, described an “AI tsunami” for labour markets and warned that AI will affect 60% of jobs in advanced economies and 40% globally. Stanford research reports a 13% employment decline for workers aged 22-25 in AI-exposed occupations.

Strategic Imperative: Amodei's essay marks a pivot point in the AI narrative: a leading developer publicly declaring that AI’s easy phase is fading and that risks of losing control are no longer fringe theories. Organisations must shift from treating AI governance as a compliance exercise to making it a core strategic imperative. Boards approving AI deployments without understanding the architectural vulnerabilities of agentic systems face mounting legal, reputational, and operational exposure.

ORGANISATIONAL RISK #1

Industry Leadership Warning: Amodei's "Adolescence of Technology"

Category: Governance, Ethics and Compliance

Anthropic CEO Dario Amodei published a 20,000-word essay on 27 January 2026 titled "The Adolescence of Technology" - the most comprehensive and candid public warning about AI risks ever issued by a leading AI company CEO. Amodei frames the current moment as humanity's civilisational test: "We are entering a rite of passage, both turbulent and inevitable, which will test who we are as a species. Humanity is about to be handed almost unimaginable power, and it is deeply unclear whether our social, political, and technological systems possess the maturity to wield it."

Key Arguments:

Timeline: Amodei predicts that "powerful AI" – systems smarter than Nobel Prize winners operating across most fields and capable of autonomous multi-day tasks – could arrive within 1-2 years. He uses the metaphor of "a country of geniuses in a datacenter" - millions of AI instances operating at superhuman speed.

Five Risk Categories: The essay systematically addresses (1) autonomy risks where AI systems act against human interests, (2) misuse for destruction including AI-enabled bioterrorism, (3) misuse for seizing power including AI-enabled totalitarianism, (4) economic disruption including 50% of entry-level white-collar jobs being displaced within 1-5 years, and (5) indirect effects on human wellbeing and purpose.

Recursive Acceleration: AI is now writing "much of the code at Anthropic", and while software engineers are seeing a 50% productivity boost, this capability exponentially accelerates development of next-gen AI systems. Amodei warns this feedback loop is "gathering steam month by month" and current AI systems may be only 1-2 years from autonomously building their successors.

What This Means for Executives and Boards:

Treat this essay as a strategic intelligence document. Commission briefings for technology, risk, and audit committees on the Amodei scenarios most relevant to your business. Evaluate whether current AI governance frameworks sufficiently address agentic alignment and vulnerabilities.

If your organisation depends on frontier AI vendors for critical operations, you inherit their governance failures. Update vendor risk assessments to include questions about their safety mechanisms and requests for transparency on observed model behaviours.

Sources:

Amodei, D., "The Adolescence of Technology," darioamodei.com, 27 January 2026. https://www.darioamodei.com/essay/the-adolescence-of-technology

Fortune, "Anthropic CEO Dario Amodei warns AI's 'adolescence' will test humanity," 27 January 2026. https://fortune.com/2026/01/27/anthropic-ceo-dario-amodei-essay-warning-ai-adolescence-test-humanity-risks-remedies/

ORGANISATIONAL RISK #2

Agentic AI Security: Taxonomy Formalised, Incidents Documented

Category: Agentic & Autonomous Risks

The deployment of autonomous AI agents in production environments has revealed fundamental security weaknesses that cannot be patched with conventional approaches. The first formal security taxonomy for agentic systems, published by OWASP in December 2025, now gives organisations a common framework for assessing them.

The Finding: The global nonprofit foundation OWASP released the "Top 10 for Agentic Applications for 2026", the first security taxonomy for autonomous AI systems. Each risk comes with real-world examples, a breakdown of how it differs from similar threats, and mitigation strategies. Examples include a Replit agent autonomously deleting a user's primary production database while attempting to resolve a configuration issue, and a prompt injection in GitHub’s MCP where a malicious public tool hides commands in its metadata.

Figure: Top 10 risks of deploying autonomous agents (source: OWASP)

Gartner’s Prediction: 40% of agentic AI projects will be cancelled by end of 2027 due to escalating costs and insufficient risk controls.

What This Means for Executives and Boards: Agentic AI is the next frontier of AI deployment - autonomous systems that can browse the web, execute code, manage files, and interact with enterprise systems. Boards should audit all deployed AI agents for OWASP Top 10 vulnerability exposure. Restrict agent permissions to minimum necessary scope and implement human-in-the-loop confirmation for any action with financial or data implications.

Sources:

OWASP, "Top 10 for Agentic Applications for 2026," 9 December 2025. https://genai.owasp.org/resource/owasp-top-10-for-agentic-applications-for-2026/

Kaspersky Research, "Analysis of Agentic AI Security Incidents 2025-2026," 26 January 2026. https://kaspersky.com/blog/top-agentic-ai-risks-2026/55184

SOCIETAL RISK #1

AI Workforce Displacement: From Early Predictions to Documented Impacts

Category: Economic & Environmental Sustainability

Labour market impacts of generative AI are moving from speculation to documented reality. IMF Managing Director Kristalina Georgieva, speaking at Davos, describes AI's labour market impact as arriving "like a tsunami." In her TIME interview, Georgieva stated: "We definitely see benefits for humanity... AI is generating benefits. It is adding a boost to productivity... We also see that we remain under-prepared for the impact of AI on the labor market. It is like a tsunami hitting the labor market, especially in advanced economies, where we assess 60% of jobs to be impacted." JPMorgan CEO Jamie Dimon warned at Davos of potential civil unrest if mass job displacement occurs without intervention.

The Finding: A study by the Stanford Digital Economy Lab found that workers aged 22-25 in the most AI-exposed occupations have experienced a 13% employment decline since November 2022. In contrast, employment for workers in less exposed fields and for more experienced workers in the same occupations has remained stable or continued to grow.

Executive and Board Implications: Assess internal hiring practices - are entry-level roles being eliminated? Organisations eliminating entry-level roles today may face severe talent pipeline gaps in coming years. Develop alternative professional development pathways that don't assume traditional entry-level experience. Communicate transparently with employees about AI's role in workforce planning. Employee anxiety about AI job loss is now a material engagement and retention factor.

Sources:

Worland, J., "The IMF's Kristalina Georgieva on the AI 'Tsunami' Hitting Jobs," TIME, Davos 2026. https://time.com/collections/davos-2026/7339218/ai-trade-global-economy-kristalina-georgieva-imf/

Brynjolfsson, E., Chandar, B., & Chen, R., "Canaries in the Coal Mine? Six Facts about the Recent Employment Effects of Artificial Intelligence," Stanford Digital Economy Lab, 13 November 2025. https://digitaleconomy.stanford.edu/publications/canaries-in-the-coal-mine/

EXECUTIVE AND BOARD ACTION IMPLICATIONS

1. Strategic Response to Amodei Warning

Board committees responsible for technology risk and enterprise risk management should treat Amodei's essay as a strategic intelligence document requiring formal response.

Actions:

Commission executive briefing on the five risk categories outlined in "The Adolescence of Technology"

Assess vendor relationships with AI providers - request information about alignment testing, constitutional approaches, and observed model behaviours

Board Oversight: Schedule a dedicated board session on AI’s societal and business risks. Question: "If Amodei is even partially correct about timelines, is our governance framework adequate?"

2. Agentic AI Security Posture

Inventory all deployed (and planned) AI agents with email, document, web and sensitive data access. Implement immediate containment protocols.

Actions:

Audit all AI agent deployments against OWASP Top 10 vulnerability categories

Prohibit AI agent access to confidential repositories until an architectural review is completed

Require human confirmation for any AI agent action involving financial authority, data exports, or system modifications

Implement audit logging for all AI agent activities and establish incident response protocols
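For technology teams briefing the board, the controls listed above (minimum-necessary permissions, human confirmation for sensitive actions, and audit logging) can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the names `AgentAction`, `ActionGate` and `SENSITIVE_CATEGORIES`, the category labels, and the approval callback are hypothetical, and none of it comes from OWASP or any vendor product.

```python
import json
import time
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical categories that always require human sign-off, mirroring the
# actions above: financial authority, data exports, system modifications.
SENSITIVE_CATEGORIES = {"financial", "data_export", "system_modification"}


@dataclass
class AgentAction:
    agent_id: str
    tool: str      # illustrative tool name, e.g. "send_payment"
    category: str  # one of SENSITIVE_CATEGORIES, or e.g. "read_only"
    detail: str


@dataclass
class ActionGate:
    allowed_tools: dict[str, set[str]]       # agent_id -> tools explicitly granted
    approver: Callable[[AgentAction], bool]  # human-in-the-loop callback (assumed)
    audit_log: list[str] = field(default_factory=list)

    def _record(self, action: AgentAction, decision: str) -> None:
        # One JSON line per decision; a real deployment would write these
        # to durable, access-controlled storage rather than a list in memory.
        self.audit_log.append(json.dumps({
            "ts": time.time(),
            "agent": action.agent_id,
            "tool": action.tool,
            "category": action.category,
            "decision": decision,
        }))

    def authorise(self, action: AgentAction) -> bool:
        # 1. Least privilege: deny any tool not explicitly granted to this agent.
        if action.tool not in self.allowed_tools.get(action.agent_id, set()):
            self._record(action, "denied_not_permitted")
            return False
        # 2. Sensitive categories proceed only with human confirmation.
        if action.category in SENSITIVE_CATEGORIES:
            approved = self.approver(action)
            self._record(action, "approved_by_human" if approved else "rejected_by_human")
            return approved
        # 3. Everything else proceeds, but is still logged.
        self._record(action, "allowed")
        return True


# Example: an approver that rejects everything stands in for a real workflow.
gate = ActionGate(
    allowed_tools={"invoice-bot": {"read_ledger", "send_payment"}},
    approver=lambda action: False,
)
gate.authorise(AgentAction("invoice-bot", "read_ledger", "read_only", "monthly report"))
gate.authorise(AgentAction("invoice-bot", "send_payment", "financial", "pay supplier"))
```

The design choice worth noting is that the gate denies by default: an agent can use only the tools it has been explicitly granted, and every decision, including denials, leaves an audit record that a risk committee could later review.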

Board Oversight: Risk committee should require monthly reporting on AI agent deployments with access to sensitive systems. Budget for layered defences as permanent operational cost. Consider whether Gartner's 40% cancellation prediction applies to your portfolio.

3. Workforce Transition Planning

Given documented entry-level employment impacts and risks to organisational wellbeing and the medium-term talent pipeline, organisations need proactive workforce strategies.

Actions:

Audit current and planned hiring to assess entry-level role elimination trends

Develop alternative professional development pathways that preserve learning opportunities without traditional entry-level positions

Communicate transparently with employees about AI's role in workforce planning - 62% of employees feel leaders underestimate its emotional impact

Consider structural approaches (rotational programmes, apprenticeships, internal mobility) that maintain talent pipeline

Board Oversight: Human capital and compensation committees should receive quarterly reporting on AI workforce impacts. Consider long-term talent pipeline implications of near-term efficiency decisions.